1. Download the open-source project

https://github.com/oobabooga/text-generation-webui


2. Install Conda on Linux (see README.md)

curl -sL "https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh" > "Miniconda3.sh"

bash Miniconda3.sh

3. Set up the Conda environment

conda create -n textgen python=3.11

conda activate textgen
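Before installing anything, it is worth confirming that the new environment is actually active. The sketch below is a minimal check, assuming the environment is named `textgen` as above and relying on conda setting the `CONDA_DEFAULT_ENV` variable on activation; `env_ready` is a hypothetical helper name.

```python
import os
import sys


def env_ready(expected_env="textgen", min_version=(3, 11),
              active_env=None, version=None):
    """Return True if the active conda env and the Python version match expectations."""
    # conda activate sets CONDA_DEFAULT_ENV to the active environment's name
    if active_env is None:
        active_env = os.environ.get("CONDA_DEFAULT_ENV", "")
    if version is None:
        version = sys.version_info[:2]
    return active_env == expected_env and tuple(version) >= min_version


if __name__ == "__main__":
    if env_ready():
        print("OK: inside 'textgen' with Python", sys.version.split()[0])
    else:
        print("Run 'conda activate textgen' first (Python 3.11 expected).")
```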

4. Install PyTorch

| System | GPU | Command |
|------------------|-----------|---------------------------------------------------------------------------------------------------------------------------------|
| Linux/WSL | NVIDIA | `pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121` |
| Linux/WSL | CPU only | `pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu` |
| Linux | AMD | `pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/rocm5.6` |
| MacOS + MPS | Any | `pip3 install torch torchvision torchaudio` |
| Windows | NVIDIA | `pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121` |
| Windows | CPU only | `pip3 install torch torchvision torchaudio` |
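The table is really a lookup keyed on operating system and GPU. The sketch below encodes its rows as a Python dict so a setup script could print the matching command. `torch_install_command` is a hypothetical helper, and the index URLs are simply the ones from the table; newer PyTorch releases may use different CUDA/ROCm suffixes.

```python
# Rows of the PyTorch table above: (system, gpu) -> extra index URL.
# None means the plain command with the default PyPI index.
TORCH_INDEX = {
    ("linux", "nvidia"): "https://download.pytorch.org/whl/cu121",
    ("wsl", "nvidia"): "https://download.pytorch.org/whl/cu121",
    ("linux", "cpu"): "https://download.pytorch.org/whl/cpu",
    ("wsl", "cpu"): "https://download.pytorch.org/whl/cpu",
    ("linux", "amd"): "https://download.pytorch.org/whl/rocm5.6",
    ("macos", "any"): None,
    ("windows", "nvidia"): "https://download.pytorch.org/whl/cu121",
    ("windows", "cpu"): None,
}

BASE_CMD = "pip3 install torch torchvision torchaudio"


def torch_install_command(system, gpu):
    """Return the pip command from the table for a (system, gpu) pair."""
    system, gpu = system.lower(), gpu.lower()
    if system == "macos":
        gpu = "any"  # the table has a single row for macOS (MPS)
    key = (system, gpu)
    if key not in TORCH_INDEX:
        raise ValueError(f"the table has no row for {system}/{gpu}")
    url = TORCH_INDEX[key]
    return f"{BASE_CMD} --index-url {url}" if url else BASE_CMD


if __name__ == "__main__":
    print(torch_install_command("linux", "nvidia"))
```

WSL with an AMD GPU deliberately raises an error, because the table only lists AMD for native Linux.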

5. Install the web UI

git clone https://github.com/oobabooga/text-generation-webui.git

cd text-generation-webui

pip install -r <requirements file according to table below>

| GPU | CPU | requirements file to use |
|-----------|-----------------|-----------------------------------------------|
| NVIDIA | has AVX2 | `requirements.txt` |
| NVIDIA | no AVX2 | `requirements_noavx2.txt` |
| AMD | has AVX2 | `requirements_amd.txt` |
| AMD | no AVX2 | `requirements_amd_noavx2.txt` |
| CPU only | has AVX2 | `requirements_cpu_only.txt` |
| CPU only | no AVX2 | `requirements_cpu_only_noavx2.txt` |
| Apple | Intel | `requirements_apple_intel.txt` |
| Apple | Apple Silicon | `requirements_apple_silicon.txt` |

* To check whether your CPU supports AVX2 on Windows, use Coreinfo: https://learn.microsoft.com/en-us/sysinternals/downloads/coreinfo
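On Linux you don't need Coreinfo, because the CPU flags are listed in `/proc/cpuinfo`. The sketch below checks for the `avx2` flag and maps the result to a file from the table. It covers only the NVIDIA/AMD/CPU-only rows, not the Apple ones. `has_avx2` and `pick_requirements` are hypothetical helpers.

```python
from pathlib import Path

# Non-Apple rows of the requirements table: (gpu, has_avx2) -> file
REQUIREMENTS = {
    ("nvidia", True): "requirements.txt",
    ("nvidia", False): "requirements_noavx2.txt",
    ("amd", True): "requirements_amd.txt",
    ("amd", False): "requirements_amd_noavx2.txt",
    ("cpu", True): "requirements_cpu_only.txt",
    ("cpu", False): "requirements_cpu_only_noavx2.txt",
}


def has_avx2(cpuinfo_text):
    """Return True if any 'flags' line of /proc/cpuinfo text lists avx2."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            # Line format: "flags\t\t: fpu vme ... avx2 ..."; split into whole
            # words so that plain "avx" is not mistaken for "avx2"
            if "avx2" in line.split(":", 1)[1].split():
                return True
    return False


def pick_requirements(gpu, avx2):
    """Map GPU vendor ('nvidia', 'amd', 'cpu') and AVX2 support to a requirements file."""
    return REQUIREMENTS[(gpu.lower(), avx2)]


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")  # exists on Linux only
    avx2 = has_avx2(cpuinfo.read_text()) if cpuinfo.exists() else False
    print("AVX2:", avx2)
    print("pip install -r", pick_requirements("nvidia", avx2))
```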

6. Start the server

python server.py

7. Open the web UI in a browser

http://127.0.0.1:7860

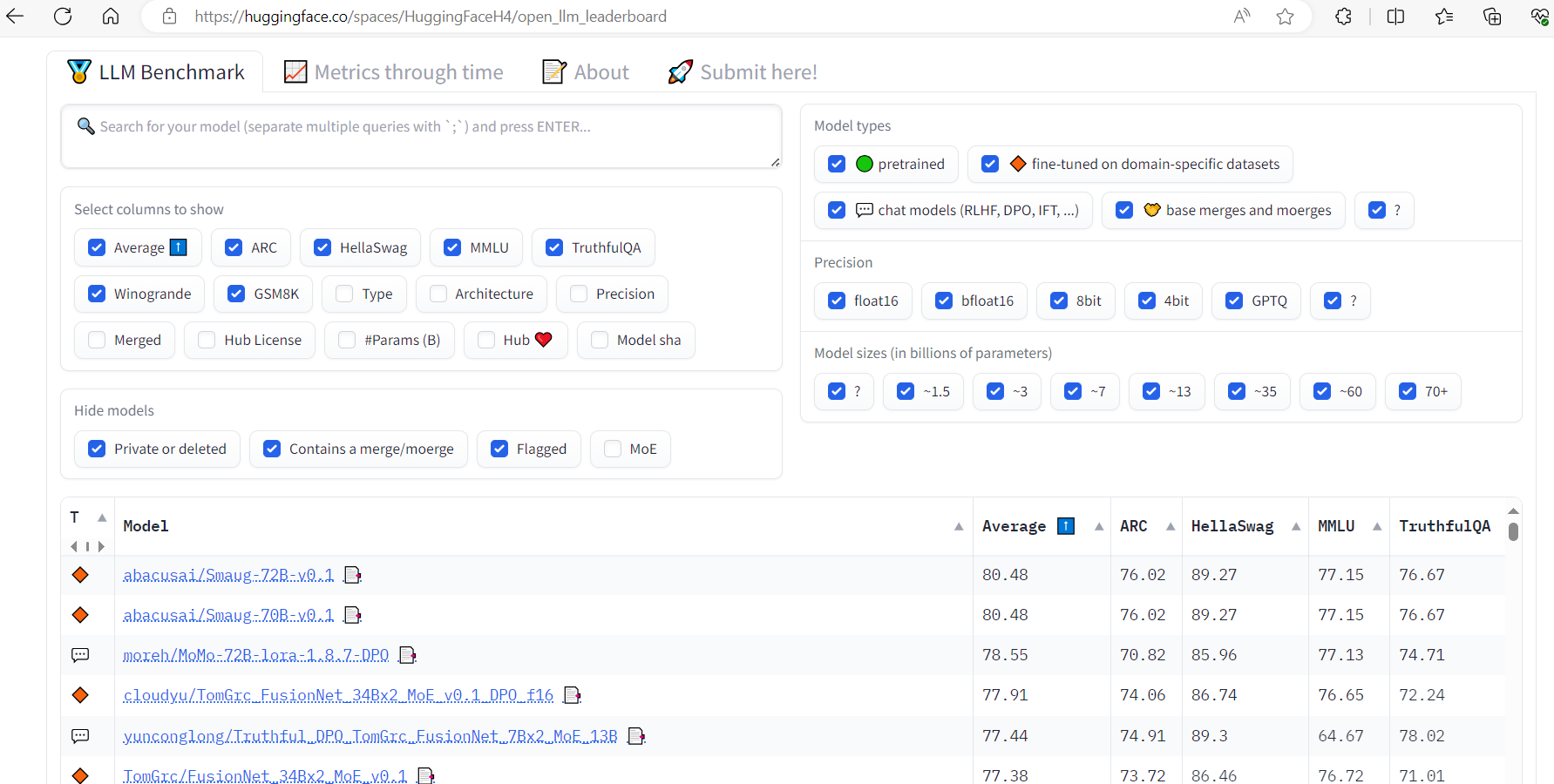
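If the page does not load, you can first check whether anything is listening on the port at all. This is a stdlib-only sketch; `webui_is_up` is a hypothetical helper, and 7860 is the default port shown above.

```python
import urllib.request


def webui_is_up(url="http://127.0.0.1:7860", timeout=3.0):
    """Return True if an HTTP server answers at url with a 2xx/3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except OSError:
        # URLError/HTTPError, connection refused and timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    print("web UI reachable:", webui_is_up())
```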

8. Find a publicly available model

https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard


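* Sort by Average (higher is better).

* The more parameters a model has, the more memory it needs, so the largest models will not run on a typical PC.

* I used upstage/SOLAR-10.7B-Instruct-v1.0 (10.7 billion parameters), which I recommend for PC use.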

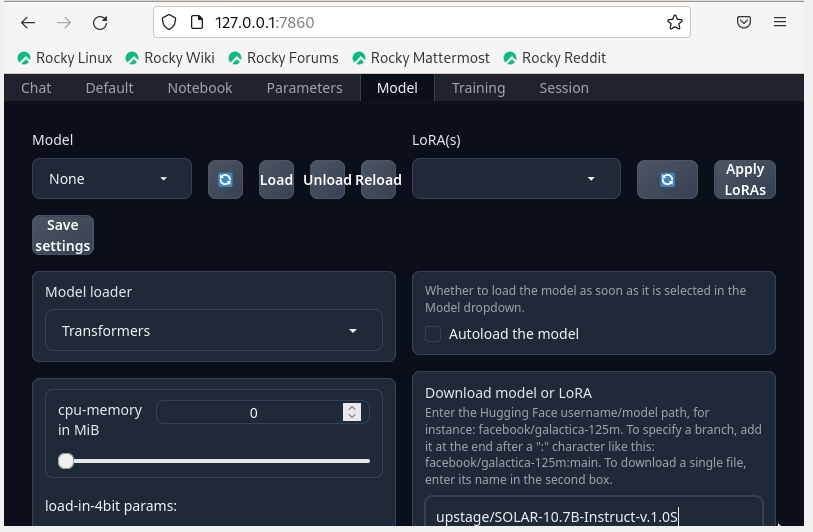
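A rough way to see why parameter count decides what runs on a PC: the weights alone take about parameters × bits per weight ÷ 8 bytes. That figure excludes activations, the KV cache, and loader overhead, so real usage is higher. The sketch below applies this arithmetic to SOLAR's 10.7 billion parameters. The listed precisions are only examples, and `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 10.7e9  # SOLAR-10.7B
    for label, bits in [("16-bit (fp16/bf16)", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label:>20}: ~{weight_memory_gb(params, bits):.1f} GB")
```

For SOLAR-10.7B this works out to about 21.4 GB at 16-bit and about 5.35 GB at 4-bit. That gap is why quantized builds (for example GGUF or GPTQ) are the usual choice on a home PC.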

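9. Download and load upstage/SOLAR-10.7B-Instruct-v1.0

Model tab > Download model or LoRA > enter `upstage/SOLAR-10.7B-Instruct-v1.0` in the input box > click Download. When the download finishes, select the model from the Model dropdown (click the refresh button if it is not listed yet) and click Load.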

Reference 1. https://github.com/oobabooga/text-generation-webui

Text generation web UI

A Gradio web UI for Large Language Models.

Its goal is to become the AUTOMATIC1111/stable-diffusion-webui of text generation.

Features

- 3 interface modes: default (two columns), notebook, and chat.

- Multiple model backends: Transformers, llama.cpp (through llama-cpp-python), ExLlamaV2, AutoGPTQ, AutoAWQ, GPTQ-for-LLaMa, CTransformers, QuIP#.

- Dropdown menu for quickly switching between different models.

- Large number of extensions (built-in and user-contributed), including Coqui TTS for realistic voice outputs, Whisper STT for voice inputs, translation, multimodal pipelines, vector databases, Stable Diffusion integration, and a lot more. See the wiki and the extensions directory for details.

- Chat with custom characters.

- Precise chat templates for instruction-following models, including Llama-2-chat, Alpaca, Vicuna, Mistral.

- LoRA: train new LoRAs with your own data, load/unload LoRAs on the fly for generation.

- Transformers library integration: load models in 4-bit or 8-bit precision through bitsandbytes, use llama.cpp with transformers samplers (llamacpp_HF loader), CPU inference in 32-bit precision using PyTorch.

- OpenAI-compatible API server with Chat and Completions endpoints -- see the examples.
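Because the API follows the OpenAI format, any HTTP client can talk to it. This sketch assumes the server was started with the `--api` flag and listens on its default port 5000 at `/v1/chat/completions`; both are assumptions here, so check the wiki for your version. `build_chat_request` and `chat` are hypothetical helpers that use only the standard library.

```python
import json
import urllib.request

# Assumed default address when the server is started with --api
API_URL = "http://127.0.0.1:5000/v1/chat/completions"


def build_chat_request(prompt, url=API_URL, max_tokens=200):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    # OpenAI response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat("Explain what a GGUF file is in one sentence."))
```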

How to install

- Clone or download the repository.

- Run the start_linux.sh, start_windows.bat, start_macos.sh, or start_wsl.bat script depending on your OS.

- Select your GPU vendor when asked.

- Once the installation ends, browse to http://localhost:7860/?__theme=dark.

- Have fun!

To restart the web UI in the future, just run the start_ script again. This script creates an installer_files folder where it sets up the project's requirements. In case you need to reinstall the requirements, you can simply delete that folder and start the web UI again.

The script accepts command-line flags. Alternatively, you can edit the CMD_FLAGS.txt file with a text editor and add your flags there.

To get updates in the future, run update_linux.sh, update_windows.bat, update_macos.sh, or update_wsl.bat.


Documentation

https://github.com/oobabooga/text-generation-webui/wiki

Downloading models

Models should be placed in the folder text-generation-webui/models. They are usually downloaded from Hugging Face.

- GGUF models are a single file and should be placed directly into models. Example:

    text-generation-webui
    └── models
        └── llama-2-13b-chat.Q4_K_M.gguf

- The remaining model types (like 16-bit transformers models and GPTQ models) are made of several files and must be placed in a subfolder. Example:

    text-generation-webui
    ├── models
    │   ├── lmsys_vicuna-33b-v1.3
    │   │   ├── config.json
    │   │   ├── generation_config.json
    │   │   ├── pytorch_model-00001-of-00007.bin
    │   │   ├── pytorch_model-00002-of-00007.bin
    │   │   ├── pytorch_model-00003-of-00007.bin
    │   │   ├── pytorch_model-00004-of-00007.bin
    │   │   ├── pytorch_model-00005-of-00007.bin
    │   │   ├── pytorch_model-00006-of-00007.bin
    │   │   ├── pytorch_model-00007-of-00007.bin
    │   │   ├── pytorch_model.bin.index.json
    │   │   ├── special_tokens_map.json
    │   │   ├── tokenizer_config.json
    │   │   └── tokenizer.model
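The two layouts can be told apart by looking at the `models` folder: a GGUF model is a single `.gguf` file, and everything else is a subfolder. The sketch below scans the folder and labels each entry by that simplified rule, which is not the web UI's own detection logic. `classify_models` is a hypothetical helper.

```python
from pathlib import Path


def classify_models(models_dir):
    """Label each entry in models_dir as a single-file GGUF model or a multi-file folder."""
    result = {}
    for entry in sorted(Path(models_dir).iterdir()):
        if entry.is_file() and entry.suffix == ".gguf":
            result[entry.name] = "gguf (single file)"
        elif entry.is_dir():
            files = [p.name for p in entry.iterdir() if p.is_file()]
            result[entry.name] = f"folder ({len(files)} files)"
        # Any other loose file (e.g. a placeholder .txt) is skipped
    return result


if __name__ == "__main__":
    for name, kind in classify_models("text-generation-webui/models").items():
        print(f"{name}: {kind}")
```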

In both cases, you can use the "Model" tab of the UI to download the model from Hugging Face automatically. It is also possible to download it via the command-line with

python download-model.py organization/model

Run python download-model.py --help to see all the options.
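The folder name in the example tree (`lmsys_vicuna-33b-v1.3` for the Hugging Face repo `lmsys/vicuna-33b-v1.3`) follows an `owner_model` pattern. The sketch below reproduces that naming and runs the download command through `subprocess`. The naming rule is an assumption inferred from the example, so check `download-model.py` in your checkout. `local_model_folder` and `download` are hypothetical helpers.

```python
import subprocess
import sys
from pathlib import Path


def local_model_folder(repo_id, models_dir="models"):
    """Map 'owner/model' to models/owner_model (naming assumed from the example tree)."""
    owner, name = repo_id.strip("/").split("/")[-2:]
    return Path(models_dir) / f"{owner}_{name}"


def download(repo_id):
    """Run download-model.py for repo_id; assumes the clone is in ./text-generation-webui."""
    cmd = [sys.executable, "download-model.py", repo_id]
    subprocess.run(cmd, check=True, cwd="text-generation-webui")
    return local_model_folder(repo_id, "text-generation-webui/models")


if __name__ == "__main__":
    print(download("upstage/SOLAR-10.7B-Instruct-v1.0"))
```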

Google Colab notebook

Contributing

If you would like to contribute to the project, check out the Contributing guidelines.

Community

- Subreddit: https://www.reddit.com/r/oobabooga/

- Discord: https://discord.gg/jwZCF2dPQN

Acknowledgment

In August 2023, Andreessen Horowitz (a16z) provided a generous grant to encourage and support my independent work on this project. I am extremely grateful for their trust and recognition.
